As a forecaster of future events, I am a reasonably good columnist, which is a sarcastic way of admitting I’ve got no particular skill at assessing risk or evaluating odds. And I’m confident that trend will continue. It takes no particular skill to observe that forecasting future developments is in demand now, as it always has been, among people who are stuck in the present with their fear, boredom, anxiety, desperation, greed, or any of the other typical human afflictions or defects that cause us to wonder when and how our circumstances may change.

There are business opportunities in forecasting, of course, derived not from the skill of a forecaster but from the needs or desires of countless potential customers. People will pay for forecasts in any number of forms, including investment advice, stock tips, betting lines, even fortune-telling. We may snicker at the judgment of anyone paying bookies or psychics for information, but almost no one is immune to curiosity and the desire to know what to expect.

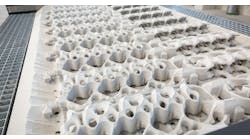

Most businesses have their own “need to know,” just as individuals do. While the custom of maintaining staff researchers to keep track of pertinent developments is less common than it once was, in recent decades the focus of forecasting has turned to modeling: relying on data analysis to project the future. Modeling is especially common now in process industries, including foundries and diecasters, letting computers assume the risk of being wrong.

“Modeling” turns forecasting from a skill into a science, setting rules and applying standard principles that may command some respect from doubters and lend some assurance to the forecast’s conclusions. Models are built on basic assumptions about the conditions of a particular question, setting boundaries on the conditions or variables that may be considered and establishing the range of conclusions that may be reached. A process model can reveal only as much of the future as we define it to reveal, and no more.

Importantly, the responsibility for a model’s reliability rests not with the model but with those who define the model, and with those who use the model to justify their choices. That means “us,” if we rely on models for our decisions. The ways and limits of modeling may not inform our futures, but they are likely to illuminate some things about ourselves if we’re honest or humble enough to take the lesson.

In the ongoing pandemic, models have taken on heightened salience, not because modeling has improved but because millions more people have suddenly turned to models of communicable disease to tell us what to expect in the spread of COVID-19. And, for the moment, virtually every model (or at least everything we were told the models predicted) has failed us.

In particular, the models offered by respected research teams at the University of Washington’s Institute for Health Metrics and Evaluation, Imperial College London, and Oxford University, as well as by the U.S. Centers for Disease Control and Prevention, are now objects of public derision because each made dire predictions of millions of deaths during March and April. No one wants those deaths to have happened, but many people want their own credulity restored.

And the resentment is not reserved for the models or the modelers; it extends to all those public officials and business leaders who offered such models as the basis for decisions to impose severe and frequently undue restrictions on individuals’ freedom to work, to move about, to worship, to assemble, and to engage in heretofore standard behavior. Should the models also have weighed the positive and negative effects of those restrictions?

There is no point in reliving all the miscalculations. There is real value, though, in recalling that during the weeks of those models’ ascendance, virtually everyone willingly surrendered his or her rights to the cause of containing the pandemic and easing the demands on those working to contain its spread. We wanted the assurance that something could be done, and was being done, and the models (and those invoking the models’ indications) delivered that assurance.

Models, and scientific conclusions more broadly understood, have been adopted as a substitute for reasoning, but the need to make decisions remains untouched by science. It’s the doubts and shortcomings in ourselves that need to be addressed and resolved, and providing that resolution is not the work of science. If we want better results, we need better decisions, and thus better data sets. We would learn a lot more about the future if we worked harder to understand human nature, starting with ourselves.